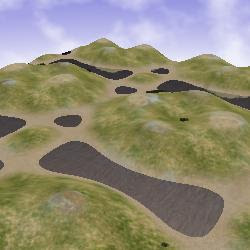

The lesson starts with step 3 of Riemer's advanced terrain tutorial. Here multiple textures are introduced (we now have grass, rock, snow and sand). To get a smooth texture transition, each vertex carries a weight for each of the four textures. The weights are calculated using fuzzy logic (rockiness, snowiness and so on) and normalised so that they total one. The weights are stored in a `Vector4` using the `TEXCOORD3` HLSL semantic. Each vector component (X, Y, Z and W) stores the weight of its respective texture. In the pixel shader the output colour is determined by adding up the weighted values from the four texture samplers.
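The weighting idea can be sketched outside the shader. The snippet below is in Python rather than HLSL, purely to show the arithmetic; the membership functions, the `max_height` value and the function names are assumptions made for illustration, not the tutorial's actual numbers.

```python
def clamp01(x):
    """Clamp a value to the range [0, 1]."""
    return max(0.0, min(1.0, x))


def texture_weights(height, max_height=30.0):
    """Return normalised (sand, grass, rock, snow) weights for a vertex height.

    The triangular membership functions are hypothetical; they only
    illustrate the 'sandiness', 'grassiness', ... idea.
    """
    h = clamp01(height / max_height)  # relative height in [0, 1]
    raw = [
        clamp01(1.0 - abs(h - 0.0) / 0.3),   # sand: lowest ground
        clamp01(1.0 - abs(h - 0.35) / 0.3),  # grass
        clamp01(1.0 - abs(h - 0.65) / 0.3),  # rock
        clamp01(1.0 - abs(h - 1.0) / 0.3),   # snow: the peaks
    ]
    total = sum(raw)  # never zero: the four ranges overlap across [0, 1]
    return [w / total for w in raw]


def blend_colour(weights, colours):
    """Weighted sum of four RGB colours, as the pixel shader does per pixel."""
    return tuple(
        sum(w * colour[i] for w, colour in zip(weights, colours))
        for i in range(3)
    )


if __name__ == "__main__":
    sand, grass = (0.9, 0.8, 0.5), (0.0, 1.0, 0.0)
    rock, snow = (0.5, 0.5, 0.5), (1.0, 1.0, 1.0)
    weights = texture_weights(15.0)
    print(weights)
    print(blend_colour(weights, [sand, grass, rock, snow]))
```

In the real effect the four weights would travel in the X, Y, Z and W components of the `Vector4`, and the colours would come from the texture samplers instead of constants.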

Step 4 is about the level of detail (or LOD) problem. Essentially the texture must be bigger (i.e. more detailed) when viewed up close, and smaller when viewed from a distance. The solution is to take the distance from the camera (i.e. the depth of a pixel) and derive from it a blend factor (a float from zero to one). When this factor is zero, the near texture coordinates are used; when it is one, the normal texture coordinates are used. The near texture coordinates are a magnification of the normal coordinates. Linear interpolation (lerp) blends between the two for factors in between. All this is done in the shader code. Very neat.
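A minimal sketch of that blend, again in Python instead of shader code. The parameter names `blend_distance` and `blend_width`, their default values and the magnification of 3 are assumptions for illustration; the sketch blends the colours sampled with the near and the normal coordinates.

```python
def clamp01(x):
    """Clamp a value to the range [0, 1]."""
    return max(0.0, min(1.0, x))


def lerp(a, b, t):
    """Linear interpolation: a when t is 0, b when t is 1."""
    return a + (b - a) * t


def blend_factor(depth, blend_distance=30.0, blend_width=50.0):
    """0 for pixels closer than blend_distance, rising to 1 over blend_width."""
    return clamp01((depth - blend_distance) / blend_width)


def near_coords(uv, magnification=3.0):
    """Near texture coordinates: the normal coordinates, magnified."""
    return (uv[0] * magnification, uv[1] * magnification)


def lod_colour(near_colour, far_colour, depth):
    """Blend the colour sampled with near coords and the one sampled with normal coords."""
    t = blend_factor(depth)
    return tuple(lerp(n, f, t) for n, f in zip(near_colour, far_colour))


if __name__ == "__main__":
    for depth in (10.0, 55.0, 100.0):
        print(depth, blend_factor(depth), lod_colour((1.0, 1.0, 1.0), (0.0, 0.0, 0.0), depth))
```

With these assumed values, everything closer than 30 units uses only the magnified texture, everything beyond 80 units uses only the normal one, and the band in between fades smoothly.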

The next step is about setting up a sky dome. This code was simple to implement as a new component called `RiemersSkyDome`. The sky dome must be drawn before the terrain, and it also needs access to the `RiemersCamera` instance. The sky dome itself is a model (.X file), and the effects in the model are simply replaced with a clone of Riemer's custom effect. The clone is important because this effect has its own values for the effect parameters.

In step 6, Riemer gives an overview of creating water. Essentially, the reflection (from above) is combined with the refraction (from below) to determine the pixel colour. Then a ripple is added using a Fresnel method. As a final modification, some "dirtiness" is added to get a realistic effect.

Step 7 starts off with the refraction map. Here again, I created a component, called `RefractionMap`. The basic idea is that the part of the scene that is below the surface of the water is drawn to a `Texture2D`. This is done by setting a clip plane, so that only pixels on one side of the plane are drawn. The tricky part is to create the plane based on the current camera orientation. For the component I created a collection property called `RenderedComponents`. The user of the component adds to this collection all the elements he wants refracted. For now I have only added the terrain to this collection.
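The clip plane test itself is simple, and can be sketched as below (Python, for illustration only). The plane is written as a normal plus an offset, and a point survives when its signed distance is not negative. The function names are hypothetical, and the sketch works in world space only: it leaves out the camera-dependent part mentioned above.

```python
def signed_distance(plane, point):
    """Signed distance-like value of a point from the plane (nx, ny, nz, d)."""
    nx, ny, nz, d = plane
    x, y, z = point
    return nx * x + ny * y + nz * z + d


def refraction_plane(water_height):
    """Plane with its normal pointing down, so points below the water are kept."""
    return (0.0, -1.0, 0.0, water_height)


def is_drawn(plane, point):
    """A point is kept when it lies on the positive side of the plane."""
    return signed_distance(plane, point) >= 0.0


if __name__ == "__main__":
    plane = refraction_plane(5.0)
    print(is_drawn(plane, (0.0, 2.0, 0.0)))  # below the water surface
    print(is_drawn(plane, (0.0, 8.0, 0.0)))  # above the water surface
```

Flipping the sign of the normal and the offset keeps the other half of the scene instead.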

In step 8, the same technique as above is used to create a reflection map. After creating a base class for `RefractionMap`, I created another component of the same kind called `ReflectionMap`. The problem here is to "extract" the reflected image to a texture.

In the next step a mirror effect is created. Much of the code comes from the HLSL tutorials. From a component viewpoint, the normal XNA draw method is not enough for the `ReflectionMap` to use. For reflection, the scene must be drawn using a reflection view matrix, so the interface of the components must be extended to contain a `void Draw(Matrix viewMatrix)` method.
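One way to picture the reflection view is a second camera mirrored in the water surface. The sketch below (Python, for illustration) mirrors a point about a horizontal water plane; it assumes the water is flat at a known height, and the function name is hypothetical. A reflection view matrix could then be built from the mirrored camera position and target.

```python
def mirror_about_water(point, water_height):
    """Mirror a point about the horizontal plane y = water_height."""
    x, y, z = point
    return (x, 2.0 * water_height - y, z)


if __name__ == "__main__":
    water_height = 5.0
    camera_position = (1.0, 8.0, 3.0)
    camera_target = (4.0, 6.0, 0.0)
    # The mirrored camera sits as far below the water as the real one is above it.
    print(mirror_about_water(camera_position, water_height))
    print(mirror_about_water(camera_target, water_height))
```

Mirroring twice gives back the original point, which is a handy sanity check.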

This concludes the lesson. We now have a terrain with perfectly mirrored water surfaces, and some interesting new components.

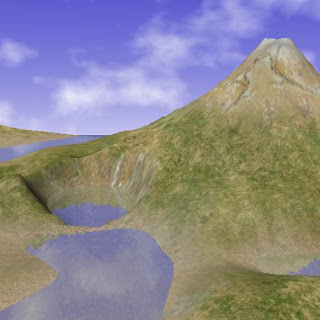

I am thinking about volcanoes and craters. A volcano is a cone-shaped mountain with a hole in the middle. The cone surface is made less smooth with randomised adjustments. A crater is simply a large hole with an elevated rim at its edge, but it gets slightly complicated when you consider that the rim must fit nicely with the original landscape.
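As a first rough sketch of the volcano idea only (Python, for illustration): a cone whose height depends on the distance from the centre, with a hole cut into the top and a little seeded random jitter on the slopes. All names, sizes and the roughness value are assumptions, and fitting a crater rim to existing terrain is not attempted here.

```python
import math
import random


def volcano_height(distance, cone_radius=50.0, cone_height=30.0,
                   crater_radius=10.0, crater_depth=12.0):
    """Height of an idealised volcano at a given distance from its centre."""
    if distance >= cone_radius:
        return 0.0  # outside the cone: leave the landscape flat
    if distance < crater_radius:
        # Height of the cone where the hole starts (the rim).
        rim = cone_height * (1.0 - crater_radius / cone_radius)
        # The hole is deepest at the centre and meets the rim at its edge.
        return rim - crater_depth * (1.0 - distance / crater_radius)
    return cone_height * (1.0 - distance / cone_radius)


def volcano_heightmap(size, centre, roughness=0.5, seed=0, **shape):
    """Square grid of heights; random jitter is applied only on the volcano."""
    rng = random.Random(seed)  # seeded so the same volcano can be rebuilt
    cone_radius = shape.get("cone_radius", 50.0)
    cx, cz = centre
    grid = []
    for z in range(size):
        row = []
        for x in range(size):
            distance = math.hypot(x - cx, z - cz)
            height = volcano_height(distance, **shape)
            if distance < cone_radius:
                height += rng.uniform(-roughness, roughness)
            row.append(height)
        grid.append(row)
    return grid


if __name__ == "__main__":
    heights = volcano_heightmap(9, (4, 4), cone_radius=4.0, cone_height=6.0,
                                crater_radius=1.5, crater_depth=2.0)
    for row in heights:
        print(" ".join(f"{h:5.2f}" for h in row))
```

These heights would presumably be added to the existing terrain heights rather than replace them.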
